- May 02, 2017

NodeWeaver takes advantage of an extremely sophisticated and flexible storage layer, based largely on the LizardFS distributed filesystem, that performs reliably and efficiently even with a small number of nodes and disk drives. Our approach to storage differs in several important ways from the choices most commonly made in other hyper-converged infrastructures, and even in dedicated storage systems; this post outlines some of them.

SINGLE STORAGE POOL

NodeWeaver uses an approach called “sea of data”, where each node contributes its own capacity to a single, large, undifferentiated pool of blocks called “chunks”. Every object in NodeWeaver, whether a file or a snapshot, is composed of these chunks. Each time the orchestrator requests the creation of a new image, the filesystem creates the new chunks and subtracts the allocated blocks from the overall capacity; the opposite happens when a virtual machine image is destroyed or released. There is no need to create separate silos, LUNs or other allocation abstractions: everything sits in a single space, and allocation and deallocation are transparent. This greatly simplifies management, prevents over-allocation and space shortages, and avoids the painful movement of space from one silo to another.
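To make the single-pool idea concrete, here is a minimal sketch of capacity accounting in a "sea of data". It is not NodeWeaver or LizardFS code: the class `ChunkPool`, its methods, and the fixed 64 MiB chunk size are hypothetical choices for illustration, and replication and chunk placement are deliberately ignored. Every node's capacity feeds one pool, creating an image takes chunks from it, and destroying the image returns them.

```python
from dataclasses import dataclass, field

# Hypothetical fixed chunk size (64 MiB), for illustration only.
# Replication and placement of chunks on specific nodes are ignored.
CHUNK_SIZE = 64 * 1024 * 1024


@dataclass
class ChunkPool:
    """One undifferentiated pool of chunks fed by the disks of every node."""

    node_capacity: dict[str, int]  # bytes contributed by each node
    images: dict[str, list[int]] = field(default_factory=dict)  # image -> chunk ids
    _next_chunk: int = 0

    @property
    def total_chunks(self) -> int:
        # All nodes add to the same pool: no silos or LUNs to size up front.
        return sum(self.node_capacity.values()) // CHUNK_SIZE

    @property
    def free_chunks(self) -> int:
        used = sum(len(chunks) for chunks in self.images.values())
        return self.total_chunks - used

    def create_image(self, name: str, size_bytes: int) -> None:
        needed = -(-size_bytes // CHUNK_SIZE)  # ceiling division
        if needed > self.free_chunks:
            raise RuntimeError("not enough free chunks in the pool")
        self.images[name] = list(range(self._next_chunk, self._next_chunk + needed))
        self._next_chunk += needed

    def destroy_image(self, name: str) -> None:
        # Released chunks immediately return to the shared free capacity.
        del self.images[name]
```

For example, two nodes contributing 2 TiB each yield a pool of 65,536 chunks under this assumed chunk size; creating a 10 GiB image takes 160 of them, and destroying it gives all 160 back to the same pool, with no per-silo bookkeeping.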

GOALS

Each chunk has a special property, called “goal”, that expresses the number of active, safe replicas the chunk must have. Files have the same property, which applies to all the chunks that compose the file. For example, a virtual machine image with a goal of 2 means that the system must hold at least 2 copies of every chunk of that file, and can therefore tolerate the loss of one copy with no impact on availability. If something changes the measured availability of the storage (for example, one node in a group of three fails), the system automatically tries to restore the planned goal by replicating the surviving safe chunks into the free space that is still available. Conversely, if the failed node is restarted and behaves correctly, there will suddenly be more copies of some chunks than their goal requires, and these “overgoal” copies are cleaned up to reclaim space.
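The goal mechanism boils down to comparing, for each chunk, the number of safe copies on live nodes with the desired goal. The sketch below shows that comparison under simplifying assumptions: the function name `reconcile`, the input layout, and the action tuples are hypothetical, and choosing target nodes and respecting free space are left out. It only decides which chunks are under goal (replicate) and which are over goal (delete).

```python
def reconcile(
    replicas: dict[str, set[str]],
    goals: dict[str, int],
    live_nodes: set[str],
) -> dict[str, tuple[str, int]]:
    """Compare each chunk's safe copies with its goal (hypothetical helper).

    replicas:   chunk id -> nodes holding a copy
    goals:      chunk id -> desired number of copies
    live_nodes: nodes currently up and behaving correctly
    Returns chunk id -> ("replicate", n) or ("delete", n) for off-goal chunks.
    """
    actions: dict[str, tuple[str, int]] = {}
    for chunk, nodes in replicas.items():
        safe = len(nodes & live_nodes)  # copies on failed nodes do not count
        goal = goals[chunk]
        if safe < goal:
            actions[chunk] = ("replicate", goal - safe)  # under goal
        elif safe > goal:
            actions[chunk] = ("delete", safe - goal)  # "overgoal" copies
    return actions
```

With three nodes and goal 2, losing `n3` makes a chunk stored on `{"n1", "n3"}` under goal, so one new copy is requested; once that copy exists on `n2` and `n3` comes back, the same chunk has three safe copies and one is marked for deletion.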

This means that it is possible to decide, VM by VM, how much replication is needed. An image such as an ISO that can easily be downloaded again from the internet may be saved at goal 1, since it is easily replaceable, while the files that make up the company ERP may require the highest level of replication to ensure continuous operation. No space is wasted unnecessarily, and goals can even be changed after the files are created, for example when a VM used for testing is moved into production. As a result, NodeWeaver is extremely efficient in its space management: a NW110 appliance (with two 2TB rotational disks plus two SSD accelerators) has 2TB of fully available space at goal 2. Other hyper-converged systems offer much less available space; for example, the EMC VSPEX Blue appliance makes only 23.9% of its storage space available, while a NW110 node makes 45% available at goal 2, the same protection level (data from Vaughn Stewart, “Hyper-Converged Infrastructures are not Storage Arrays”). The granularity of NodeWeaver makes it simple and efficient to balance capacity against reliability, reducing the loss of space common in other storage platforms.
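The space arithmetic behind per-file goals is simple: each file consumes its logical size multiplied by its goal. The sketch below uses hypothetical file names and sizes, treats 2TB as 2000 GB, and counts only the rotational disks of the NW110 (an assumption, since the text describes the SSDs as accelerators); it does not attempt to reproduce the percentages quoted above.

```python
def raw_consumed_gb(files: dict[str, tuple[int, int]]) -> int:
    """Raw capacity used: each file's logical size (GB) times its goal."""
    return sum(size_gb * goal for size_gb, goal in files.values())


def usable_capacity_gb(raw_gb: int, goal: int) -> float:
    """Logical space available if every file uses the same goal."""
    return raw_gb / goal


# Hypothetical files: (logical size in GB, goal)
files = {
    "installer.iso": (5, 1),  # easily replaceable, single copy
    "erp-db": (200, 3),       # business-critical, three copies
    "test-vm": (50, 1),       # test VM, single copy for now
}
print(raw_consumed_gb(files))       # 655
files["test-vm"] = (50, 2)          # VM promoted to production: raise its goal
print(raw_consumed_gb(files))       # 705
print(usable_capacity_gb(4000, 2))  # 2000.0: two 2TB disks at goal 2
```

Forcing all three files to goal 3 would consume 765 GB of raw space; assigning goals per file spends the extra copies only where they matter.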

SNAPSHOTS

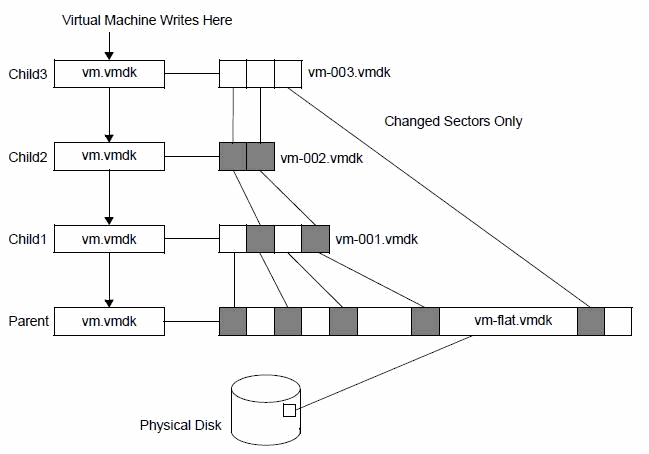

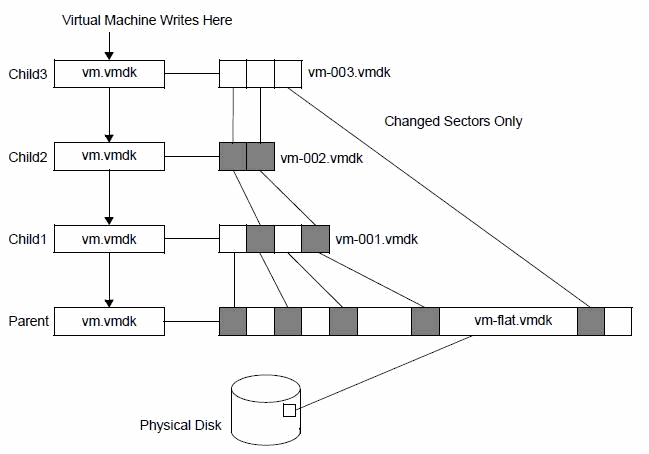

Most virtualization and storage systems use a technique commonly called “copy-on-write”. When a copy-on-write snapshot is first created, only the metadata describing where the original data is stored is copied; no physical copy takes place, so creating the snapshot is extremely fast. As blocks in the original image change, the original data is copied into a pre-allocated space set aside for snapshots before it is overwritten. Each original block is copied only once, on the first write request after the snapshot was taken. When a snapshot of a snapshot is taken, a “snapshot chain” is created, and the system must track changes and allocations across all the images that are connected together.

The longer the chain, the higher the overhead of traversing all the images, checking which blocks need to be changed, and managing the allocation and deallocation of space.
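The following is a simplified model of copy-on-write with a linear snapshot chain, written only to illustrate the costs described above; it does not describe any particular product. The class `CowVolume` and its methods are hypothetical. The live image is overwritten in place, and each snapshot owns a save area that receives the old data of blocks overwritten after it was taken. The first overwrite of a block costs one read and two writes, and reading an old snapshot may require walking several save areas along the chain.

```python
class CowVolume:
    """Hypothetical copy-on-write volume with a linear chain of snapshots.

    The live image is updated in place; the newest snapshot's save area
    receives the old data of each block on its first overwrite.
    Assumes a fixed set of blocks defined at creation time.
    """

    def __init__(self, blocks: dict[int, str]):
        self.live = dict(blocks)                    # block index -> current data
        self.save_areas: list[dict[int, str]] = []  # oldest snapshot first
        self.io = {"read": 0, "write": 0}

    def snapshot(self) -> int:
        # Metadata only: an empty save area, no data is copied.
        self.save_areas.append({})
        return len(self.save_areas) - 1

    def write(self, block: int, data: str) -> None:
        latest = self.save_areas[-1] if self.save_areas else None
        if latest is not None and block not in latest:
            old = self.live[block]   # 1 read of the original data
            self.io["read"] += 1
            latest[block] = old      # 1 write into the snapshot space
            self.io["write"] += 1
        self.live[block] = data      # 1 write of the new data in place
        self.io["write"] += 1

    def read_snapshot(self, snap_id: int, block: int) -> tuple[str, int]:
        """Return the block as of snap_id and how many save areas were checked."""
        hops = 0
        for area in self.save_areas[snap_id:]:  # walk the chain towards the present
            hops += 1
            if block in area:
                return area[block], hops
        return self.live[block], hops
```

In this model, after three snapshots an unchanged block read from the oldest snapshot requires checking all three save areas before falling back to the live image, which is the chain-length overhead described above.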

NodeWeaver uses a structurally different approach, called Redirect-on-Write. Instead of copying blocks on the first write, new data is written to new chunks and the previous chunks are preserved; they are removed only when no file refers to them any longer, which substantially reduces overhead. In fact, each block of data changed under copy-on-write costs one read and two writes, compared to a single write for Redirect-on-Write as implemented in NodeWeaver. The Redirect-on-Write approach is not only faster, but its speed is constant regardless of how many snapshots are taken; this means that in highly duplicated scenarios (such as thin-provisioned VDI) our platform shows a minimal loss of performance, even with a very large number of virtual machines, compared to alternative platforms.
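A minimal sketch of redirect-on-write follows, assuming reference counting as the way to tell when "no file refers" to a chunk. This is an illustration, not the LizardFS or NodeWeaver implementation: the class `RedirectOnWriteStore` and its methods are hypothetical, and only data writes are counted (block-map and reference-count updates are metadata). A snapshot copies the block map and bumps reference counts; a write goes to a fresh chunk and redirects the map; old chunks are freed only when their count reaches zero.

```python
from collections import Counter


class RedirectOnWriteStore:
    """Hypothetical redirect-on-write store with reference-counted chunks."""

    def __init__(self) -> None:
        self.chunks: dict[int, str] = {}      # chunk id -> data
        self.refs: Counter = Counter()        # chunk id -> files referencing it
        self.files: dict[str, list[int]] = {} # file -> block map (chunk ids)
        self.next_id = 0
        self.data_writes = 0                  # counts data I/O only

    def _new_chunk(self, data: str) -> int:
        cid = self.next_id
        self.next_id += 1
        self.chunks[cid] = data
        self.data_writes += 1
        return cid

    def _release(self, cid: int) -> None:
        self.refs[cid] -= 1
        if self.refs[cid] == 0:  # no file refers to this chunk any longer
            del self.refs[cid]
            del self.chunks[cid]

    def create(self, name: str, blocks: list[str]) -> None:
        self.files[name] = [self._new_chunk(b) for b in blocks]
        for cid in self.files[name]:
            self.refs[cid] += 1

    def snapshot(self, src: str, dst: str) -> None:
        # Metadata only: copy the block map and bump reference counts.
        self.files[dst] = list(self.files[src])
        for cid in self.files[dst]:
            self.refs[cid] += 1

    def write(self, name: str, index: int, data: str) -> None:
        # Redirect: new data goes to a fresh chunk; the old chunk is untouched.
        old = self.files[name][index]
        new = self._new_chunk(data)
        self.files[name][index] = new
        self.refs[new] += 1
        self._release(old)

    def delete(self, name: str) -> None:
        for cid in self.files.pop(name):
            self._release(cid)

    def read(self, name: str, index: int) -> str:
        return self.chunks[self.files[name][index]]
```

In this sketch, overwriting a block of an image with 100 snapshots still costs exactly one data write, and the superseded chunk is reclaimed only after the last snapshot that references it is deleted.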